The Hadoop Cluster Administration training course is designed to give you the knowledge and skills to become a successful Hadoop Architect. It starts with the fundamental concepts of Apache Hadoop and Hadoop clusters, then covers how to deploy, configure, manage, monitor, and secure a Hadoop cluster. The course also covers HBase administration. Learners work through many challenging, practical, and focused hands-on exercises. By the end of this Hadoop Cluster Administration training, you will be prepared to understand and solve the real-world problems you may encounter while working on a Hadoop cluster.

Hadoop 2.0 Developer training at ISEL Global teaches you the technical aspects of Apache Hadoop and gives you a deeper understanding of what Hadoop can do. Our experienced trainers guide you through developing applications and analyzing Big Data, so that you understand the key concepts required to build robust big data processing applications. Successful candidates earn the Hadoop Professional credential and will be able to handle and analyze terabyte-scale data using MapReduce.

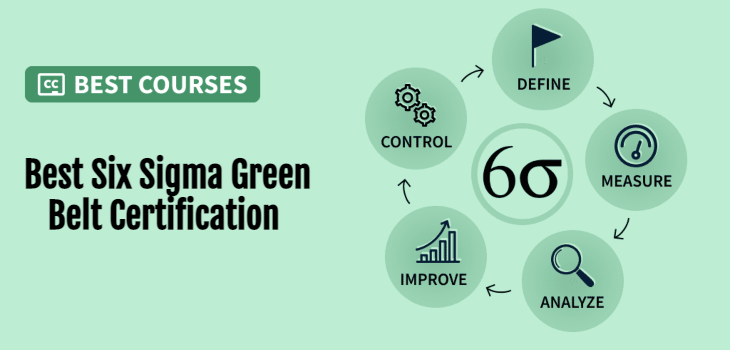

| Module | Topics |
|---|---|
| Introduction to Quality and Lean Six Sigma | History of quality (Deming, Juran, Ishikawa, Taguchi, etc.) |
| | Evolution of Six Sigma |
| | Evolution of Lean manufacturing |
| | Six Sigma: philosophy and objectives |
| | Deliverables of a Lean Six Sigma project |
| | Six Sigma roles and responsibilities |
| Lean Six Sigma Framework and How It Works | Meanings of Six Sigma |
| | Data-driven decision making |
| | The problem-solving strategy Y = f(x) |
| | Understanding how the DMAIC and DMADV frameworks work |
| Documenting Stakeholder Requirements | SIPOC: identifying stakeholders and customers |
| | Determining critical requirements |
| | Identifying performance metrics |
| | QFD: Quality Function Deployment |
| | Voice of the Customer, Business, and Employee |
| | Market research |
| Define Phase Tools | Project selection, project prioritization, Kano model, Juran's customer needs |
| | Preparing the project charter |
| | Initiating teams; stages of team evolution |
| | Mapping the as-is process flow |
| | Understanding Critical to Quality (CTQ) and the VOC-to-CTQ drill-down |
| | Cost of Poor Quality (COPQ) and its types |
| Project and Team Management Fundamentals | Enterprise-wide view, team formation, team facilitation, team dynamics |
| | Time management for teams |
| | Team decision-making tools |
| | Management and planning tools |
| | Conflict management |
| | Team performance evaluation and rewards |
| | Project management plan and baselines |
| | Project tracking |
| Measure Phase Tools | Understanding data, types of data, dimensions of data |
| | Population data vs. sample data; sampling techniques |
| | Sample size calculation; confidence level and confidence interval |
| | Data collection templates and techniques |
| | Measurement system analysis: calibration, Gauge R&R, Kappa analysis |
| | Central Limit Theorem |
| | Stability and distribution types |
| | Data transformation techniques |
| | Descriptive statistics (measures of central tendency and measures of variation) |
| | Baseline sigma calculation (DPU, DPMO, Cp, Cpk) |
| Analyze Phase Tools | 7 QC tools (histogram, cause-and-effect/fishbone diagram, Pareto chart, control chart, scatter diagram, process mapping, check sheet) |
| | 5 Why analysis, control-impact matrix, affinity clustering |
| | Data visualization: box plot, tree diagram, multi-vari charts, trend and comparison charts |
| | Hypothesis testing: parametric tests (continuous data, discrete data); alpha and beta errors |
| | Non-parametric tests (for non-normal data) |
| | Multivariate analysis: discriminant analysis, factor analysis, cluster analysis, multidimensional scaling, correspondence analysis, conjoint analysis, canonical correlation |
| | Structural equation modeling, principal component analysis |
| | Multivariate analysis of variance (MANOVA) |
| DMADV Tools | Need for the DMADV framework and its history |
| | Introduction to "Design Thinking" |
| | TRIZ methods of solution generation |
| | Prioritization matrix |
| | Risk mitigation techniques |
| | Creativity tools |
| | Analyzing and verifying the design |
| | Reliability statistics |
| Lean Methodology | Fundamentals of Lean; 5 principles of Lean |
| | Value-add vs. non-value-add; the 8 types of deadly waste |
| | Value stream mapping: lead time, processing time, cycle time, turnaround time, first pass yield, rolled throughput yield, process cycle efficiency |
| | Heijunka: leveling, sequencing, stability, takt time |
| | Jidoka, poka-yoke, autonomation, built-in quality, stopping at abnormalities |
| | Visual management; 5S implementation |
| | JIT (just in time), critical level of replenishment, supermarket or consumption-based replenishment, Kanban |
| | Kaizen; Lean Action Workout (AWO) |
| Improve Phase Tools | Solution design matrix, brainstorming |
| | Failure Mode and Effects Analysis (FMEA) |
| | Regression techniques: simple linear regression, multiple regression |
| | Nonlinear, binary logistic, nominal logistic, and ordinal logistic regression |
| | Design of experiments (DOE); Taguchi robustness concepts |
| | Full factorials, fractional factorials, screening experiments, response surface analysis, EVOP (evolutionary operation), mixture experiments |
| | PDCA: Plan, Do, Check, Act |
| | Theory of Constraints (TOC) |
| | Introduction to digital transformation, Industry 4.0, RPA |
| | Basics of solution generation through "Design Thinking" concepts |
| | "Six Thinking Hats" (Dr. Edward de Bono) |
| Control Phase Tools | Statistical process control |
| | Control plan for sustaining benefits |
| | Introduction to control charts and types of control charts |
| Project Documentation and Sustenance | CBA: cost-benefit analysis |
| | Project documentation per the DMAIC framework |
| | Horizontal deployment of lessons learned |
| | Inculcating Lean Six Sigma DNA in all layers of the organization |

In this course, you will:

- Understand Big Data and the various types of data stored in Hadoop

- Understand the fundamentals of MapReduce, Hadoop Distributed File System (HDFS), YARN, and how to write MapReduce code

- Learn best practices and considerations for Hadoop development, debugging techniques, and how to implement workflows and common algorithms

- Learn how to leverage Hadoop frameworks such as Apache Pig™, Apache Hive™, Sqoop, Flume, Oozie, and other projects from the Apache Hadoop ecosystem

- Understand optimal hardware configurations and network considerations for building out, maintaining and monitoring your Hadoop cluster

- Learn advanced Hadoop API topics required for real-world data analysis

- Understand the path to ROI with Hadoop

The workshop includes:

- 3 days of comprehensive training

- The principles and philosophy behind the Apache Hadoop methodology

- Dummy projects to work on for practical experience

- A certificate for 24 PDUs

- A downloadable e-book

- Industry-based case studies

- High-quality training from an experienced trainer

- A course completion certificate upon passing the examination

This course is best suited to systems administrators, Windows administrators, Linux administrators, infrastructure engineers, database administrators, Big Data architects, mainframe professionals, and IT managers who are interested in learning Hadoop administration.

The course is also suited to:

- Architects and developers who design, develop, and maintain Hadoop-based solutions

- Data analysts, BI analysts, BI developers, SAS developers, and related profiles who analyze Big Data in a Hadoop environment

- Consultants who are actively involved in a Hadoop project

- Experienced Java software engineers who need to understand and develop Java MapReduce applications for Hadoop 2.0
